Although wireless communication, especially on the frontier-like ISM band, is fraught with hidden dangers, the vendors working together in the IEEE and Wi-Fi Alliance have managed to successfully shepherd wireless LAN technology from humble beginnings to the reasonably reliable high performance we enjoy today.

In the 1980s, even before connectivity to the Internet became commonplace, people realized that connecting a group of computers together in a local area network (LAN) made those computers much more useful. Any user could then print to shared printers, store files on file servers, send electronic mail, and more. A decade later, the Internet revolution swept the world and LANs became the on-ramp to the information superhighway. The LAN technology of choice was almost universally Ethernet, which is terrific apart from one big downside: those pesky wires.

In the late 1990s, the Institute of Electrical and Electronics Engineers (IEEE) solved that problem with their 802.11 standard, which specified a protocol for creating wireless LANs. If ever the expression "easier said than done" applied, it was here. Huge challenges have been overcome in the past 15 years to get us to the point where reasonably reliable, fast, and secure wireless LAN equipment can today be deployed by anyone, and where every laptop comes with built-in Wi-Fi. But overcome they were—and here's how.

Early Aloha

The journey started back in the early 1970s. The University of Hawaii had facilities scattered around different islands, but the computers were located at the main campus in Honolulu. Back then, computers weren't all that portable, but it was still possible to connect to those computers from remote locations by way of a terminal and a telephone connection at the blazing speed of 300 to 1200 bits per second. But the telephone connection was both slow and unreliable.

A small group of networking pioneers led by Norman Abramson felt that they could design a better system to connect their remote terminals to the university's central computing facilities. The basic idea, later developed into "AlohaNET," was to use radio communications to transmit the data from the terminals on the remote islands to the central computers and back again. In those days, the well-established approach to sharing radio resources among several stations was to divide the channel either into time slots or into frequency bands, then assign a slot or band to each of the stations. (These two approaches are called time division multiple access [TDMA] and frequency division multiple access [FDMA], respectively.)

Obviously, dividing the initial channel into smaller, fixed-size slots or channels results in several lower-speed channels, so the AlohaNET creators came up with a different system to share the radio bandwidth. AlohaNET was designed with only two high-speed UHF channels: one downlink (from Honolulu) and one uplink (to Honolulu). The uplink channel was to be shared by all the remote locations to transmit to Honolulu. To avoid slicing and dicing into smaller slots or channels, the full channel capacity was available to everyone. But this created the possibility that two remote stations would transmit at the same time, making both transmissions impossible to decode in Honolulu. Transmissions might fail, just like any surfer might fall off her board while riding a wave. But hey, nothing prevents her from trying again. This was the fundamental, ground-breaking advance of AlohaNET, reused in all members of the family of protocols collectively known as "random access protocols."

The random access approach implemented in the AlohaNET represented a paradigm shift from a voice network approach to a data network. The traditional channel sharing techniques (FDMA and TDMA) implied the reservation of a low speed channel for every user. That low speed was enough for voice, and the fact that the channel was reserved was certainly convenient; it prevented the voice call from being abruptly interrupted.

But terminal traffic to the central computers presented very different requirements. For one thing, terminal traffic is bursty. The user issues a command, waits for the computer to process the command, and looks at the data received while pondering a further command. This pattern includes both long silent periods and peak bursts of data.

The burstiness of computer traffic called for a more efficient use of communication resources than what could be provided by either TDMA or FDMA. If each station was assigned a reserved low-speed channel, the transmission of a burst would take a long time. Furthermore, channel resources would be wasted during the long silent periods. The solution was a concept that was implemented by AlohaNET's random access protocol that is central to data networks: statistical multiplexing. A single high speed channel is shared among all the users, but each user uses it only some of the time. While Alice is carefully examining the output of her program over a cup of tea, Bob could be uploading his data to the central computer for later processing. Later, the roles might be reversed as Alice uploads her new program while Bob is out surfing.

To make this multiplexing work, the team needed a mechanism that would allow the remote stations to learn about the failure of their initial transmission attempt (so that they could try again). This was achieved in an indirect way. Honolulu would immediately transmit through the downlink channel whatever it correctly received from the uplink channel. So if the remote station saw its own transmission echoed back by Honolulu, it knew that everything went well and Honolulu had received the transmission successfully. Otherwise, there must have been a problem, making it a good idea to retransmit the data.
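The echo-as-acknowledgment scheme can be sketched in a few lines of Python. This is a minimal model, assuming fixed-length frames and that any overlap between two frames on the shared uplink destroys both; the station names and time units are made up for illustration.

```python
def aloha_echoes(start_times, duration):
    """Return the ids of stations whose frame Honolulu decodes (and echoes).

    start_times maps a station id to the time its frame starts on the
    shared uplink; every frame occupies the channel for `duration` units.
    """
    echoed = set()
    for sid, start in start_times.items():
        overlaps = any(
            other != sid and abs(other_start - start) < duration
            for other, other_start in start_times.items()
        )
        if not overlaps:
            echoed.add(sid)
    return echoed


def needs_retransmit(start_times, duration):
    """Stations that never hear their own frame echoed back must try again."""
    return set(start_times) - aloha_echoes(start_times, duration)


# Three terminals share the uplink; each frame lasts 10 time units.
starts = {"Maui": 0, "Kauai": 4, "Hilo": 30}
print(sorted(aloha_echoes(starts, 10)))      # ['Hilo']
print(sorted(needs_retransmit(starts, 10)))  # ['Kauai', 'Maui']
```

Frames from Maui and Kauai overlap, so neither is echoed and both stations will retransmit later; Hilo's frame goes through untouched.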

Standards wars

"The wonderful thing about standards is that there are so many of them to choose from." Grace Hopper, as per the UNIX-HATERS Handbook (PDF) page 9 or 49.

By the end of the last century, two standards were competing head to head in the wireless local area network arena. The American alternative, developed by the IEEE, relied on simpler, more straightforward approaches. The European alternative, proposed by the European Telecommunications Standards Institute (ETSI), was more sophisticated, featuring higher data rates and traffic prioritization for service differentiation. Vendors favored the easier-to-implement IEEE alternative, ignoring all optional features.

Obviously, a simpler approach had the advantage of a shorter time-to-market, which was critical for obtaining substantial market share and which paved the way for the ultimate success of the IEEE specification over the one standardized by ETSI. The IEEE 802.11 standard in question belongs to the 802 standard family that also includes IEEE 802.3 (Ethernet). As time passed, the IEEE 802.11 standard was refined to incorporate some features that were present in the early ETSI proposal, such as higher data rates and service differentiation.

Spreading the spectrum to avoid industry, science, and medicine

The whole point of a local area network (LAN) is that everyone gets to build their own local system without having to coordinate with anyone else. But for radio spectrum, tight regulation is the norm in order to avoid undue interference between different users. Wi-Fi sidesteps the need for such regulation, and the need to pay for radio spectrum, by shacking up with microwave ovens, cordless phones, Bluetooth devices, and much more in the unlicensed 2.4GHz industrial, scientific, and medical (ISM) band.

The original 802.11 specification published in 1997 worked at speeds of 1 and 2Mbps over infrared—though this was never implemented—or by using microwave radio on the 2.4GHz ISM band. Users of the ISM band are required to use a spread spectrum technique to avoid interference as much as possible. Most modulation techniques put as many bits in as few Hertz of radio spectrum as possible, so the available radio bandwidth is used efficiently. Spread spectrum does exactly the opposite: it spreads the signal out over a much larger part of the radio band, reducing the number of bits per Hertz. The advantage of this is that it effectively dilutes any narrow-band interference.

Frequency hopping

The simplest spread spectrum modulation technique is frequency hopping (now obsolete in Wi-Fi), which is exactly what it sounds like: the transmission quickly hops from one frequency to another. Frequency hopping was invented several times over the first half of the 20th century, most notably by actress Hedy Lamarr and composer George Antheil. Through her first husband (of six), Lamarr became interested in the problem of how to guide torpedoes in a way that resists jamming and even detection by the enemy. Frequency hopping can help resist jamming, but quickly changing frequencies only works if the sender and receiver operate in perfect sync.
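To make the synchronization requirement concrete, here is a minimal Python sketch in which sender and receiver derive the same hop pattern from a shared seed, playing the role of a shared piano roll. The seed values and the choice of 88 channels (the number of keys on a piano) are illustrative assumptions, not parameters of any Wi-Fi standard.

```python
import random


def hop_sequence(seed, n_channels, n_hops):
    """Pseudorandom hop pattern; anyone who knows the seed gets the same one."""
    rng = random.Random(seed)
    return [rng.randrange(n_channels) for _ in range(n_hops)]


# Sender and receiver agree on the seed in advance.
sender = hop_sequence(seed=1942, n_channels=88, n_hops=10)
receiver = hop_sequence(seed=1942, n_channels=88, n_hops=10)
# A jammer or eavesdropper without the seed can only guess.
outsider = hop_sequence(seed=7, n_channels=88, n_hops=10)

print(sender == receiver)  # True: both hop in lockstep
print(sum(a == b for a, b in zip(sender, outsider)), "of 10 hops match by chance")
```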

Antheil, who had created the composition Ballet Mécanique for a collection of synchronized player pianos, contributed the synchronization based on a piano roll. A 1942 patent for frequency hopping was granted to Lamarr and Antheil, but the significance of the invention wouldn't be recognized until the 1990s, when the wireless revolution got underway.

802.11 direct-sequence spread spectrum

The 1997 version of the 802.11 standard allows for 1 or 2Mbps direct-sequence spread spectrum (DSSS) modulation. Rather than moving a narrowband transmission across the 2.4GHz band, DSSS transmits a signal that occupies a much bigger portion of the band, but does so continuously. The underlying data signal in a DSSS transmission is of relatively low bandwidth compared to the DSSS radio bandwidth. Upon transmission, the data signal gets spread out and upon reception the original data signal is recovered using a correlator, which reverses the spreading procedure. The correlator gets rid of a lot of narrowband interference in the process.

It's a bit like reading a book at the beach. Sand on the pages will make the text harder to read. To avoid this, you could bring a book with big letters and then hold it at a larger distance than usual. The letters then appear at the normal size, but the sand grains are smaller relative to the letters, so they interfere less.

IEEE 802.11-1997 uses a symbol rate of 1MHz, with one or two bits per symbol encoded through differential binary or quadrature phase-shift keying (DBPSK or DQPSK), where the phase of a carrier signal is shifted to encode the data bits. ("Differential" means that it's not the absolute phase that matters, but the difference relative to the previous symbol. See our feature on DOCSIS for more details about how bits are encoded as symbols.)
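Differential encoding can be sketched in Python as follows. The receiver only compares each symbol with the previous one, so a constant, unknown phase rotation introduced by the channel drops out. The Gray-coded mapping from bit pairs to phase changes is an assumption made for this sketch; the 802.11 specification holds the normative table.

```python
import cmath
import math

# Gray-coded bit pair -> phase change in quarter turns (assumed mapping).
DIBIT_TO_QUARTERS = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
QUARTERS_TO_DIBIT = {q: d for d, q in DIBIT_TO_QUARTERS.items()}


def dqpsk_modulate(dibits):
    """Unit-magnitude symbols, preceded by a phase-0 reference symbol."""
    phase = 0.0
    symbols = [cmath.exp(1j * phase)]
    for d in dibits:
        phase += DIBIT_TO_QUARTERS[d] * math.pi / 2
        symbols.append(cmath.exp(1j * phase))
    return symbols


def dqpsk_demodulate(symbols):
    """Decode from the phase *difference* between consecutive symbols."""
    dibits = []
    for prev, cur in zip(symbols, symbols[1:]):
        delta = cmath.phase(cur * prev.conjugate())   # in (-pi, pi]
        quarters = round(delta / (math.pi / 2)) % 4   # snap to nearest quarter turn
        dibits.append(QUARTERS_TO_DIBIT[quarters])
    return dibits


data = [(0, 1), (1, 1), (0, 0), (1, 0)]
tx = dqpsk_modulate(data)
# The channel rotates every symbol by the same unknown phase.
rx = [s * cmath.exp(1j * 1.234) for s in tx]
print(dqpsk_demodulate(rx) == data)  # True
```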

The 1MHz DBPSK/DQPSK signal is then multiplied by a pseudorandom signal that has 11 "chips" for each symbol (for a chiprate of 11MHz). Because the chiprate is 11 times the symbol rate, the transmitted spectrum is spread out and flattened. When such a wide signal suffers narrowband interference, the impact is low since most of the transmitted signal remains unaffected. The receiver takes the incoming sequence of chips and multiplies it by the same pseudorandom sequence that was used by the transmitter. This recovers the original PSK-modulated signal.
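The spreading and despreading steps can be sketched in Python. 802.11's 11-chip spreading code is the Barker sequence used below. The sketch works on one real-valued sample per chip and models a narrowband interferer sitting right on the carrier as a constant offset in baseband; both are simplifying assumptions.

```python
# 11-chip Barker sequence, the spreading code of 802.11 DSSS.
BARKER_11 = [+1, -1, +1, +1, -1, +1, +1, +1, -1, -1, -1]


def spread(bits):
    """BPSK-map each bit to +1/-1, then multiply it by the 11-chip code."""
    chips = []
    for b in bits:
        symbol = 1 if b else -1
        chips.extend(symbol * c for c in BARKER_11)
    return chips


def despread(chips):
    """Correlate each 11-chip block with the same code and take the sign."""
    bits = []
    for i in range(0, len(chips), len(BARKER_11)):
        block = chips[i:i + len(BARKER_11)]
        correlation = sum(x * c for x, c in zip(block, BARKER_11))
        bits.append(1 if correlation > 0 else 0)
    return bits


data = [1, 0, 1, 1, 0]
# The interferer is three times stronger than each individual chip.
interference = 3.0
received = [c + interference for c in spread(data)]
print(despread(received) == data)  # True

# Without spreading, the same interferer swamps every bit.
unspread = [(1 if b else -1) + interference for b in data]
print([1 if x > 0 else 0 for x in unspread])  # [1, 1, 1, 1, 1]
```

The correlator adds up 11 chips of signal coherently, while the constant interferer mostly cancels against the code's mix of +1 and -1 chips.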

802.11b: complementary code keying

Being able to transfer data wirelessly at speeds topping out not much higher than 100 kilobytes per second is impressive from the perspective that it works at all, but for real-world applications, some extra speed would be helpful. This is where 802.11b comes in, which specified 5.5Mbps and 11Mbps bitrates in 1999.

Simply increasing the symbol rate and, along with it, the DSSS chiprate, would allow for higher bitrates but would use more radio spectrum. Instead, 802.11b encodes additional data bits in the DSSS chipping sequence, using a modulation scheme called complementary code keying (CCK). The 5.5Mbps rate uses four 8-chip sequences (encoding two bits), while the 11Mbps rate uses 64 (six bits). Together with the two DQPSK bits, this adds up to four or eight bits per symbol with a 1.375MHz symbol rate.
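The CCK numbers can be checked with a short Python sketch. The codeword function follows the 8-chip CCK formula from the 802.11b specification as we understand it, with four phases (here p1 to p4). Holding p1 fixed, since it carries the two DQPSK bits, and letting p2 to p4 each take one of four values yields 64 distinct codewords, or six bits. Treat it as an illustration, not a conformant encoder: it omits the scrambler and the exact bit-to-phase mapping.

```python
import cmath
import math
from itertools import product

CHIP_RATE = 11e6          # chips per second, same as 802.11-1997 DSSS
CHIPS_PER_CODEWORD = 8


def cck_codeword(p1, p2, p3, p4):
    """8-chip complex CCK codeword built from four phases (radians)."""
    def e(x):
        return cmath.exp(1j * x)
    return [
        e(p1 + p2 + p3 + p4), e(p1 + p3 + p4), e(p1 + p2 + p4), -e(p1 + p4),
        e(p1 + p2 + p3), e(p1 + p3), -e(p1 + p2), e(p1),
    ]


quarter_turns = [k * math.pi / 2 for k in range(4)]
codewords = {
    tuple((round(c.real, 6), round(c.imag, 6)) for c in cck_codeword(0.0, p2, p3, p4))
    for p2, p3, p4 in product(quarter_turns, repeat=3)
}
print(len(codewords))  # 64 distinct codewords, i.e. 6 bits

symbol_rate = CHIP_RATE / CHIPS_PER_CODEWORD   # 1.375 million symbols/s
for code_bits in (2, 6):                        # 5.5Mbps and 11Mbps modes
    bits_per_symbol = 2 + code_bits             # 2 DQPSK bits + codeword choice
    print(bits_per_symbol * symbol_rate / 1e6, "Mbps")
```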

802.11a: orthogonal frequency-division multiplexing

For 802.11a (1999), the IEEE selected a very different technology than DSSS/CCK: orthogonal frequency division multiplexing (OFDM). DSSS is a single-carrier approach since there is a single high-rate (which means wideband) signal modulating the carrier. This has some drawbacks, as a wideband channel presents different behaviors at different frequencies and needs to be equalized. It is far more convenient to send a large number of carriers and use low-rate (narrowband) signals to modulate each of them. It wasn't until powerful digital signal processors (DSPs) were available that the inverse fast Fourier transform (IFFT) algorithm could be used to pack a large number of subcarriers very closely (in frequency) without too much mutual interference.

Rather than have a single signal occupy a whopping 22MHz of radio spectrum as with DSSS, 802.11a transmits 48 data-carrying subcarriers over 20MHz. Because there are so many subcarriers, the bit and symbol rates for each of them are quite low: the symbol rate is one every 4 microseconds (250kHz), which includes an 800-nanosecond guard interval between symbols. The low symbol rate and the guard time for each subcarrier are OFDM's secret weapons: they make it robust against reflections, also known as multipath interference. Radio waves at these frequencies have a tendency to bounce off of all kinds of obstacles, such that a receiver will generally get multiple copies of the transmitted signal, where the reflections are slightly delayed. This is a phenomenon some of us remember from the analog TV days, where it showed up as ghosting.
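The effect of the guard interval can be demonstrated with a toy OFDM link in Python. To keep it short, the sketch uses 8 subcarriers instead of 802.11a's 64-point transform, a naive O(N²) DFT instead of an FFT, a single OFDM symbol, no noise, and a channel response the receiver already knows (real receivers estimate it from training symbols). The guard interval is implemented as a cyclic prefix: a copy of the end of the symbol placed in front of it. As long as the echo is shorter than the prefix, each subcarrier sees just one complex gain, which a single division undoes.

```python
import cmath


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def channel(signal, taps):
    """Multipath: each tap adds a delayed, scaled copy (echo) of the signal."""
    return [sum(h * signal[t - d] for d, h in enumerate(taps) if t - d >= 0)
            for t in range(len(signal))]


def ofdm_link(symbols, taps, cp_len):
    time_domain = idft(symbols)                      # one symbol per subcarrier
    prefix = time_domain[len(time_domain) - cp_len:]  # guard interval as cyclic prefix
    rx = channel(prefix + time_domain, taps)
    freq = dft(rx[cp_len:cp_len + len(symbols)])     # drop the guard interval
    gains = dft(taps + [0] * (len(symbols) - len(taps)))  # per-subcarrier gain
    return [y / g for y, g in zip(freq, gains)]      # one-tap equalizer


qpsk = [1+1j, -1+1j, -1-1j, 1-1j, 1+1j, 1-1j, -1+1j, -1-1j]
echo = [1.0, 0.5]   # direct path plus a half-strength echo one sample later

recovered = ofdm_link(qpsk, echo, cp_len=2)
print(all(abs(r - s) < 1e-9 for r, s in zip(recovered, qpsk)))  # True

no_guard = ofdm_link(qpsk, echo, cp_len=0)
print(max(abs(r - s) for r, s in zip(no_guard, qpsk)))  # clearly nonzero
```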

A problem with radio transmission is that signals invariably "echo" across the radio band, with copies of the intended transmission also appearing on higher and lower frequencies, causing interference for neighboring transmissions. This makes it extremely challenging to transmit signals close together in frequency, which is of course exactly what OFDM does. It gets away with this because the carrier frequencies and symbol rates are chosen such that the spikes in the frequency band are "orthogonal": just where one transmission is most powerful, all other transmissions are magically silent. It's similar to how WWI-era military aircraft were able to shoot through the arc of the propeller; both the propeller and the machine gun bullets potentially occupy the same space, but they're carefully timed not to interfere.
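The orthogonality trick can be checked numerically. In 802.11a, the 4-microsecond symbol consists of the 800-nanosecond guard interval plus 3.2 microseconds of useful signal, so subcarriers spaced 1/3.2 microseconds = 312.5kHz apart complete a whole number of cycles relative to each other. The Python sketch below (sampling 64 points per symbol, an arbitrary choice) measures how much one tone "sees" of another over that window.

```python
import cmath

SYMBOL_TIME = 3.2e-6          # useful 802.11a symbol duration, guard excluded
SPACING = 1 / SYMBOL_TIME     # 312.5 kHz subcarrier spacing
SAMPLES = 64


def overlap(f1, f2):
    """Normalized inner product of two tones over one symbol period."""
    dt = SYMBOL_TIME / SAMPLES
    total = sum(cmath.exp(2j * cmath.pi * (f1 - f2) * n * dt) for n in range(SAMPLES))
    return abs(total) / SAMPLES


print(round(overlap(0, 0), 6))              # 1.0: a subcarrier fully "sees" itself
print(round(overlap(0, 3 * SPACING), 6))    # 0.0: on-grid neighbors vanish
print(round(overlap(0, 0.5 * SPACING), 3))  # about 0.64: an off-grid tone leaks through
```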

With techniques like BPSK, QPSK, 16QAM, or 64QAM (1, 2, 4, or 6 bits per symbol), this adds up to between 250 kbps and 1.5Mbps per subcarrier. The total raw bitrate for all 48 subcarriers is thus 12 to 72Mbps.
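The arithmetic is easy to reproduce; this short Python snippet multiplies the bits per symbol by 250,000 symbols per second and by the 48 data subcarriers.

```python
SYMBOL_TIME_S = 4e-6       # one OFDM symbol every 4 microseconds, guard included
DATA_SUBCARRIERS = 48

MODULATIONS = {"BPSK": 1, "QPSK": 2, "16QAM": 4, "64QAM": 6}   # bits per symbol


def raw_bitrate(bits_per_symbol):
    """Raw (uncoded) 802.11a bitrate in bits per second."""
    return bits_per_symbol / SYMBOL_TIME_S * DATA_SUBCARRIERS


for name, bits in MODULATIONS.items():
    per_subcarrier_kbps = bits / SYMBOL_TIME_S / 1e3
    print(f"{name:6} {per_subcarrier_kbps:6.0f} kbps/subcarrier  "
          f"{raw_bitrate(bits) / 1e6:3.0f} Mbps total")
```

These are raw rates, before error-correction coding; 802.11a's advertised rates of 6 to 54Mbps are what remains after coding at rates between 1/2 and 3/4.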

Unlike 802.11-1997 and 802.11b, 802.11a uses the 5GHz ISM band rather than the 2.4GHz ISM band. Although it took a while to sort out 5GHz availability around the world—which didn't exactly help 802.11a deployment—the 5GHz band has a lot more room than the cramped 2.4GHz band, with fewer users. 5GHz signals are more readily absorbed so they don't reach as far. This is often a downside, but it can also be an advantage: neighboring networks don't interfere as much because the signals don't pass through walls and floors that well.

Although 802.11a saw some adoption in the enterprise space, it wasn't a runaway success. What was needed was a way to bring 802.11a speeds to the 2.4GHz band in a backward compatible way. This is exactly what 802.11g (2003) does by using 802.11a-style OFDM in the 2.4GHz band, though it adds "protection" mechanisms (discussed later) for 802.11b coexistence.

802.11n: much, much, much more speed

Surprisingly, 802.11g didn't satiate the need for more speed. So the IEEE went back to the drawing board once again to find new ways to push more bits through the ether. They didn't come up with any new modulations this time around, but rather used several techniques that add up to much higher bitrates, and, more importantly, much better real-world throughput. First, the number of data-carrying OFDM subcarriers was increased from 48 to 52 which, together with a slightly more efficient error-correction coding rate (5/6 rather than 3/4), brought the maximum bitrate for a single 20MHz stream up to 65Mbps.

The second speed increase came from MIMO: multiple-input/multiple-output. With MIMO, a Wi-Fi station can send or receive multiple data streams through different antennas at the same time. The signal between each combination of send and receive antennas takes a slightly different path, so each antenna sees each transmitted data stream at a slightly different signal strength. This makes it possible to recover the multiple data streams by applying a good deal of digital signal processing. All 802.11n Wi-Fi systems except mobile phones support at least two streams, but three or four are also possible. Using two streams increases the bitrate to 130Mbps.
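The stream separation can be illustrated with a 2x2 example in Python. This sketch uses zero-forcing, which simply inverts the channel matrix; it assumes the receiver already knows the channel (in practice it's estimated from training fields) and ignores noise. The channel values are hypothetical, and real receivers often use more robust detectors, but the principle is the same.

```python
def solve_2x2(h, y):
    """Zero-forcing: invert the 2x2 channel matrix h to undo stream mixing."""
    (a, b), (c, d) = h
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("channel matrix is (nearly) singular; streams can't be separated")
    return [(d * y[0] - b * y[1]) / det,
            (-c * y[0] + a * y[1]) / det]


# H[i][j]: how transmit antenna j is heard on receive antenna i (hypothetical values).
H = [[1.0 + 0.2j, 0.6 - 0.1j],
     [0.5 + 0.3j, 0.9 - 0.4j]]

sent = [1 + 1j, -1 + 1j]            # two QPSK symbols sent at the same time
received = [H[0][0] * sent[0] + H[0][1] * sent[1],
            H[1][0] * sent[0] + H[1][1] * sent[1]]

recovered = solve_2x2(H, received)
print(all(abs(r - s) < 1e-9 for r, s in zip(recovered, sent)))  # True
```

If both receive antennas saw the two streams identically, the matrix would be singular and the streams inseparable; the slightly different paths are what make MIMO work.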

The third mechanism to increase speed is an option to use "wide channels." 40MHz rather than 20MHz channels allow for 108 data subcarriers, which brings the bitrate to 270Mbps with 2x2 MIMO (two antennas used by the sender and two antennas used by the receiver). Optionally, the guard interval can be reduced from 800 to 400 nanoseconds, adding roughly another 10 percent, bringing the total bitrate to 300Mbps for 2x2 MIMO, 450Mbps for 3x3 MIMO, and 600Mbps for 4x4 MIMO. However, as the 2.4GHz band is already very crowded, some vendors only implement wide channels in the 5GHz band. Last but not least, 802.11n makes it possible to transmit multiple payloads in one packet and use block acknowledgments, reducing the number of overhead bits that must be transmitted.
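The 802.11n bitrates follow from a simple formula: bits per OFDM symbol divided by the symbol duration. The Python sketch below assumes 64-QAM (6 bits per subcarrier) with a 5/6 error-correction coding rate, the fastest combination in 802.11n, and 52 or 108 data subcarriers for 20MHz and 40MHz channels.

```python
DATA_SUBCARRIERS = {20: 52, 40: 108}   # data subcarriers per channel width (MHz)
USEFUL_SYMBOL_US = 3.2                 # OFDM symbol without the guard interval


def ht_rate_mbps(width_mhz, streams, guard_ns=800, bits_per_subcarrier=6, coding_rate=5/6):
    """Peak 802.11n PHY rate: data bits per OFDM symbol / symbol duration."""
    symbol_us = USEFUL_SYMBOL_US + guard_ns / 1000
    bits_per_symbol = (streams * DATA_SUBCARRIERS[width_mhz]
                       * bits_per_subcarrier * coding_rate)
    return bits_per_symbol / symbol_us   # bits per microsecond == Mbps


for width, streams, gi in [(20, 1, 800), (20, 2, 800), (40, 2, 800),
                           (40, 2, 400), (40, 3, 400), (40, 4, 400)]:
    rate = ht_rate_mbps(width, streams, gi)
    print(f"{width}MHz {streams}x{streams} GI={gi}ns: {rate:.0f} Mbps")
```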

Propagation and interference

Wired communication uses a tightly controlled medium for signal propagation. A wire has predictable properties and is, to a certain extent, isolated from the outer world. In wireless communication, the situation is exactly the opposite. The behavior of the transmission medium is quite unpredictable, and interference is a fact of life. Radio engineers resort to statistics to describe radio propagation—accurately modeling it is too complex. The channel attenuates (dampens) the signal and also introduces dispersion (spreading out) across time and frequency. Only under ideal circumstances in free, open space does the signal simply propagate from the transmitter to the receiver following a single path, making the problem amenable to analysis.

Obviously, the typical propagation conditions are very far from ideal. Radio waves hit all kinds of objects that cause reflections. The signal that arrives at the receiver is the sum of a large number of signals that followed different paths. Some signals follow a short path and thus arrive quickly. Others follow a longer path, so it takes them extra time to arrive. Drop a marble in a sink filled with water, and you will see waves propagating and reflecting in all directions, making the overall behavior of the waves hard to predict. In some places, two different fronts of waves add up, creating a stronger wave. In other places, waves coming from different directions cancel each other out.
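A two-path Python sketch shows why a small change in position can matter so much. It assumes a single reflection of a given relative strength and ignores everything else: a path difference of a full wavelength makes the two copies add up, half a wavelength makes them cancel. At 2.4GHz, half a wavelength is only about 6 centimeters.

```python
import cmath
import math

SPEED_OF_LIGHT = 299_792_458.0   # m/s


def combined_amplitude(freq_hz, extra_path_m, echo_strength=1.0):
    """Amplitude at the receiver when the direct wave meets one reflected copy.

    The reflection travels `extra_path_m` further, so it arrives phase-shifted.
    """
    wavelength = SPEED_OF_LIGHT / freq_hz
    phase_shift = 2 * math.pi * extra_path_m / wavelength
    return abs(1 + echo_strength * cmath.exp(-1j * phase_shift))


f = 2.437e9                              # center frequency of channel 6
half_wave = SPEED_OF_LIGHT / f / 2       # about 6 cm
print(round(combined_amplitude(f, 2 * half_wave), 3))   # 2.0: waves add up
print(round(combined_amplitude(f, half_wave), 3))       # 0.0: waves cancel
print(round(combined_amplitude(f, half_wave, 0.7), 3))  # 0.3: partial fade
```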

Then there's interference. It's not unlikely that your neighbor also has some Wi-Fi equipment. And there are other devices, such as Bluetooth devices or microwave ovens, that operate in the same band as Wi-Fi. Obviously, all this interference adds to the above-mentioned problems to make it even more difficult to correctly detect and decode the signal we want.

The bottom line is that the signal quality that we are going to observe is rather unpredictable. The best we can do is to be ready to deal with signal quality heterogeneity. If the propagation conditions are good and interference is low, we can transmit at high data rates. In challenging propagation conditions, with lots of interference, we have to fall back to low data rates. This is why Wi-Fi devices offer different connection speeds and constantly adapt the data rate to the environment conditions.
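One classic way to adapt the data rate is Auto Rate Fallback (ARF): drop to a lower rate after a few consecutive failed transmissions, and try a higher rate after a run of successes. The Python sketch below is a toy version with arbitrary thresholds, using the 802.11a/g OFDM rates. Actual Wi-Fi drivers use more elaborate algorithms, so treat this as an illustration of the idea, not of any particular product.

```python
RATES_MBPS = [6, 9, 12, 18, 24, 36, 48, 54]   # 802.11a/g OFDM rates


class AutoRateFallback:
    """Toy ARF-style controller; the thresholds are illustrative only."""

    def __init__(self, up_after=10, down_after=2):
        self.index = len(RATES_MBPS) - 1   # start optimistic, at the top rate
        self.up_after = up_after
        self.down_after = down_after
        self.successes = 0
        self.failures = 0

    @property
    def rate(self):
        return RATES_MBPS[self.index]

    def report(self, acked):
        """Feed back whether the last frame was acknowledged."""
        if acked:
            self.successes += 1
            self.failures = 0
            if self.successes >= self.up_after and self.index < len(RATES_MBPS) - 1:
                self.index += 1            # conditions look good: try faster
                self.successes = 0
        else:
            self.failures += 1
            self.successes = 0
            if self.failures >= self.down_after and self.index > 0:
                self.index -= 1            # repeated losses: slow down
                self.failures = 0


arf = AutoRateFallback()
for _ in range(4):          # the user walks away; frames start failing
    arf.report(acked=False)
print(arf.rate)             # 36
for _ in range(10):         # conditions improve again
    arf.report(acked=True)
print(arf.rate)             # 48
```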

Channels and overlap

Wi-Fi in the 2.4GHz ISM band uses 14 channels at 5MHz intervals. (Channels 12 and 13 are not available in North America and there are other country-specific peculiarities. Channel 14 is a special case.) However, DSSS transmissions are approximately 22MHz wide, so the 802.11b standard specifies that two transmissions should be 25MHz apart to avoid undue interference. This is the source of the common wisdom that you should use channels 1, 6, and 11 to avoid overlap. However, real life is much messier than what can be encapsulated in such a simple recommendation.
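The channel arithmetic can be sketched in Python. Channels 1 through 13 are centered at 2407 + 5 × n MHz, and channel 14 sits at 2484MHz. The greedy `non_overlapping` function is a hypothetical helper that picks channels whose center frequencies are at least a given distance apart.

```python
def channel_center_mhz(channel):
    """Center frequency of a 2.4GHz Wi-Fi channel."""
    if channel == 14:
        return 2484                      # the odd one out, off the 5MHz grid
    if 1 <= channel <= 13:
        return 2407 + 5 * channel
    raise ValueError(f"no 2.4GHz channel {channel}")


def non_overlapping(channels, min_separation_mhz=25):
    """Greedily pick channels whose centers are far enough apart."""
    picked = []
    for ch in channels:
        if all(abs(channel_center_mhz(ch) - channel_center_mhz(p)) >= min_separation_mhz
               for p in picked):
            picked.append(ch)
    return picked


print(channel_center_mhz(1), channel_center_mhz(6), channel_center_mhz(11))  # 2412 2437 2462
print(non_overlapping(range(1, 12)))      # [1, 6, 11]: 25MHz apart, channels 1-11
print(non_overlapping(range(1, 14), 20))  # [1, 5, 9, 13]: 20MHz apart, channels 1-13
```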

Receivers typically aren't as good as the IEEE wants them to be, with the result that a sufficiently strong signal can cause interference even if it's more than 5 channels away. But using channels that slightly overlap often works just fine, especially if the different transmitters are relatively far apart. So if you have four Wi-Fi base stations, it's probably better to use channels 1-4-8-11 in North America rather than have two base stations sit on the same channel in the 1-6-11 configuration. In Europe and many other parts of the world 1-5-9-13 is possible, which provides the 20MHz separation needed for 802.11g and 802.11n. In the 5GHz band where 802.11a—and sometimes 802.11n—lives, the channels are 20MHz wide, although they're still numbered in 5MHz intervals (so three quarters of the channel numbers remain unused). Some 5GHz channels are only available if they are not used for other purposes such as radar, so they're only selected when setting the channel selection to "auto." This also varies by country.

Of course, these days few of us have the luxury to slice and dice a wide open 2.4GHz band. It's not uncommon to see a dozen or more Wi-Fi networks in a residential neighborhood. Fortunately, just because they're there doesn't mean that all of these networks are going to interfere much. Most networks are idle most of the time—statistical multiplexing for the win. However, if you find yourself stuck between active Wi-Fi networks, it can pay to experiment a bit.

In some cases, the best choice is to select the same channel your neighbors are using. That way, your network and theirs will politely take turns transmitting. Waiting for your neighbor's transmissions will reduce your maximum throughput, but they also wait for you, so you get to transmit at maximum speed when it's your turn. By contrast, if you choose a channel that heavily overlaps with a neighbor's network that is both strong and active, the two networks won't "see" each other. Rather than taking turns, they generate interference whenever they both transmit at the same time, reducing the usable transmission rates.

Then again, in the case of a more remote network, overlapping can be the better choice than sharing the same channel. The added distance reduces the interference, so transmission speed remains high and your network won't wait for the other's transmissions to be completed. All else being equal, choose channel 1 or the highest available option. That way, overlap can only come from one direction.

Who gets to transmit: media access control

With only one radio channel for sending and receiving and multiple devices that may have data to transmit, obviously, some protocol is needed for the devices to play nice and take turns transmitting. Imagine that you are participating in a conference call. Having all the participants in the call talking at the same time is not very effective. Ideally, the different participants that have something interesting to say should take turns speaking. Those that have nothing to say can simply remain silent.

A natural courtesy rule is not to interrupt each other: in other words, to wait for the other party to finish before talking. Therefore, the different parties listen to the medium before talking, and refrain from saying anything when someone else is speaking. In wireless network jargon this is called "carrier sense multiple access" (CSMA). CSMA solves part of the problem, but it still allows two devices to start transmitting at the same time after a previous transmission ends, creating a collision. And unlike with wired Ethernet, Wi-Fi devices can't detect collisions as they happen.

The solution to this problem is that, after waiting until an ongoing transmission has finished, stations that want to transmit then wait for an additional random amount of time. If they're lucky, they'll choose a different random time, and one will start the transmission while the other is still waiting, and a collision is avoided. This solution is aptly named "collision avoidance" (CA). The 802.11 media access control layer combines CSMA and CA, shortened to CSMA/CA. Devices also observe a random time between packets if they have multiple packets to transmit, in order to give other systems a chance to get a word in. The implementation of CSMA/CA used in IEEE 802.11 is called the "distributed coordination function" (DCF). The basic idea is that before transmitting a packet, stations choose a random "backoff" counter. Then, as long as the channel is deemed idle, the stations decrease their backoff counter by one every slot time. The slot time is either 9 or 20 microseconds, depending on the 802.11 version at hand. Eventually, the backoff counter reaches zero and at that point the station transmits its packet. After the transmission, the station chooses a new backoff value and the story repeats itself. This way, collisions only occur when two stations choose the same random backoff value.
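A simplified DCF contention round can be simulated in Python. Each contending station draws a backoff counter between 0 and the contention window; the lowest counter wins, and a tie means a collision. To keep the sketch short, every station redraws its counter each round (real DCF stations freeze and resume their counters, and enlarge the contention window after a collision). The default window of 15 slots is the minimum for OFDM-based 802.11; the seed and round count are arbitrary.

```python
import random


def dcf_round(n_stations, cw, rng):
    """One contention round. Returns (stations hitting zero first, idle slots)."""
    counters = [rng.randint(0, cw) for _ in range(n_stations)]
    first = min(counters)                 # idle slots before someone transmits
    return counters.count(first), first


def simulate(n_stations, cw=15, rounds=10_000, seed=1):
    """Return (collision probability, average idle slots per round)."""
    rng = random.Random(seed)
    collisions = 0
    idle_slots = 0
    for _ in range(rounds):
        winners, idle = dcf_round(n_stations, cw, rng)
        idle_slots += idle
        if winners > 1:                   # two counters reached zero together
            collisions += 1
    return collisions / rounds, idle_slots / rounds


for n in (1, 2, 5, 10):
    p_coll, idle = simulate(n)
    print(f"{n:2} stations: collision rate {p_coll:.2f}, "
          f"avg idle {idle:.1f} slots ({idle * 9:.0f} us at 9 us/slot)")
```

More contenders mean fewer idle slots but more collisions, which is why the real protocol grows the contention window after a collision.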

If the packet doesn't make it to the intended receiver, it's retransmitted. Of course, a transmitter needs to know whether a transmission has been successful or not to decide whether a retransmission is necessary. For this reason, an IEEE 802.11 station immediately sends back an acknowledgment packet after receiving a regular (unicast) data packet. (This is not done for multicast/broadcast packets because there is more than one receiver. Those packets are simply sent at a very low rate.) If a transmitter fails to receive this acknowledgment, it will schedule the packet for retransmission. Once in a while, an acknowledgment gets lost, so an unnecessary retransmission happens, leading to a duplicated packet.
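Acknowledgments, retransmissions, and the duplicates caused by lost acknowledgments can be modeled with a stop-and-wait loop. In this Python sketch, the receiver recognizes duplicates by sequence number (802.11 frames carry one for this purpose). The loss probabilities, seed, and retry limit of 7 are illustrative assumptions.

```python
import random


def send_with_retries(frames, p_data_loss, p_ack_loss, retry_limit=7, seed=3):
    """Return (delivered payloads, duplicates discarded, frames given up on)."""
    rng = random.Random(seed)
    delivered, seen = [], set()
    duplicates = gave_up = 0
    for seq, payload in enumerate(frames):
        for _attempt in range(1 + retry_limit):
            if rng.random() < p_data_loss:
                continue                  # data frame lost: no ACK comes back
            if seq in seen:
                duplicates += 1           # a copy of a frame we already have
            else:
                seen.add(seq)
                delivered.append(payload)
            if rng.random() >= p_ack_loss:
                break                     # ACK arrived: move on to the next frame
        else:
            gave_up += 1                  # retry limit reached without an ACK
    return delivered, duplicates, gave_up


got, dups, failed = send_with_retries(list(range(100)), p_data_loss=0.1, p_ack_loss=0.1)
print(len(got), "delivered,", dups, "duplicates discarded,", failed, "given up")
```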

Fairness

DCF is a "fair" protocol in the sense that all the stations have the same opportunity to transmit. This concept of fairness results in a somewhat bizarre behavior which is known as the "performance anomaly," which boils down to "the slowest sets the pace." If you are right next to your access point and enjoy good channel conditions, you can wirelessly communicate at a relatively high speed, say 54Mbps. Now, if someone else connects to the same access point from a remote room or office, he would use a slower data rate, say 6Mbps. And—surprise!—the speed you get drops to almost what you would get if you were using a 6Mbps rate yourself. The reason? DCF is just being "fair," and you both will perceive a similar performance. The number of transmission attempts is going to be the same for both. The only difference is that the remote device will occupy the channel for a long time (since it's using a low data rate) while the closer device will occupy the medium for a short time each transmission attempt. It's a bit like sharing a lane with a big, slow truck. Some may argue that this is a very particular concept of fairness.
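The performance anomaly follows from a back-of-the-envelope calculation. If DCF gives every station one frame per round, each station gets the same number of frames per second, and a round lasts as long as all the frames put together. The Python sketch assumes 1500-byte frames and ignores all protocol overhead.

```python
def fair_share_throughput(rates_mbps, frame_bits=12_000):
    """Per-station throughput (Mbps) when each station sends one frame per round."""
    round_time_s = sum(frame_bits / (r * 1e6) for r in rates_mbps)
    per_station_mbps = frame_bits / round_time_s / 1e6
    return [per_station_mbps] * len(rates_mbps)


print(fair_share_throughput([54, 54]))  # two fast stations: about 27 Mbps each
print(fair_share_throughput([54, 6]))   # one slow neighbor: about 5.4 Mbps each
```

The fast station spends about 0.2 milliseconds per frame, the slow one 2 milliseconds, so the slow station's airtime dominates every round.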

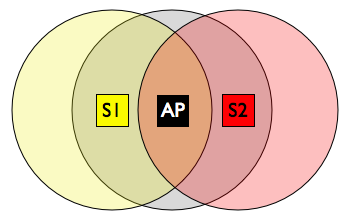

CSMA assumes that all stations can see each other's transmissions. This is not necessarily true. It's possible that two stations interfere with each other even though they can't detect each other's transmissions. This happens, for instance, when an obstacle between two stations prevents them from hearing each other while they both transmit to the same access point, which has a clear channel to each of them. Or they may simply be out of range of each other. This is called the "hidden node problem."

The hidden node problem. Say station 1 starts to transmit. Station 2 doesn't see station 1's transmission, so it also starts to transmit. The access point hears both transmissions but fails to decode either of them because they overlap and interfere heavily with each other. The access point doesn't send any acknowledgments, so the two stations retransmit and collide again. This continues and the effective throughput of the network is almost zero.

This problem can be alleviated using a four-way handshake, which consists of four different messages: request-to-send (RTS), clear-to-send (CTS), data, and acknowledgment (ACK). A station that is ready to transmit sends an RTS containing a field that indicates how long the channel has to be reserved. Upon correct reception, the intended receiver (the access point) issues a CTS message that also contains the duration of the channel reservation. Ideally, this CTS will be received by all the potential interferers that are in the transmission range of the AP, which all defer their transmission to prevent collisions, so the station that had initially issued the RTS transmits its data packet without incident and the access point sends an acknowledgment.

Since the RTS, CTS, and ACK packets are short signaling packets, the chances that they encounter errors and collisions are low. If a collision occurs between two RTS packets, it will be a short collision, so not much channel time is wasted. By using the four-way handshake, collisions among long data packets are avoided. But the extra RTS/CTS packets add overhead, reducing the channel time available for user data transmission. So RTS/CTS is only enabled for long packets, and is often disabled completely.
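The reservation bookkeeping can be sketched in Python. The RTS announces time for three short interframe spaces (SIFS) plus the CTS, data, and ACK; the CTS announces the same reservation minus what has already elapsed. The airtime values are hypothetical, and `use_rts` assumes the common convention that the handshake is used only for frames longer than a configurable threshold.

```python
def rts_duration_us(sifs_us, cts_us, data_us, ack_us):
    """Reservation an RTS announces: everything still to come after the RTS."""
    return 3 * sifs_us + cts_us + data_us + ack_us


def cts_duration_us(rts_reservation_us, sifs_us, cts_us):
    """The CTS repeats the reservation, minus the part that has elapsed."""
    return rts_reservation_us - sifs_us - cts_us


def use_rts(frame_bytes, rts_threshold_bytes):
    """Only pay for the handshake on frames longer than the threshold."""
    return frame_bytes > rts_threshold_bytes


# Hypothetical airtimes in microseconds, for illustration only.
SIFS, CTS, DATA, ACK = 16, 44, 300, 44
rts_nav = rts_duration_us(SIFS, CTS, DATA, ACK)
print(rts_nav)                                # 436
print(cts_duration_us(rts_nav, SIFS, CTS))    # 376 = SIFS + DATA + SIFS + ACK
print(use_rts(1500, 500), use_rts(100, 500))  # True False
```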

Overhead and the MAC bottleneck

Ever wondered why you can hardly squeeze 30Mbps out of your 54Mbps hardware? Blame the MAC bottleneck. First, transmissions are delayed a random amount of time which necessarily means that there are moments when the medium is idle and, therefore, channel time is wasted. Channel time is also wasted when a collision occurs. And even successful transmissions come with a certain amount of overhead. Wireless transmissions are preceded by training sequences or preambles that allow the receiver to adapt to the channel conditions and to synchronize with the timing of the incoming data symbols. The acknowledgement also represents some overhead. Additionally, switching from transmission to reception and vice versa is not free; the hardware needs some time to reconfigure. It also doesn't help that Wi-Fi transmits entire Ethernet frames rather than just the IP packets inside Ethernet frames.

All this overhead is acceptable for long packets, but as the packets become shorter, the fixed amount of overhead per packet gets relatively larger. The problem is that as physical data rates increase with each new generation of the technology, a packet of a given size takes less time to transmit, so the relative overhead increases. For a short packet, the time wasted in the form of overhead can be larger than the time that is used for actual data transmission! This is the MAC bottleneck problem: at a given point, no matter how much the physical data rate increases, the user will not enjoy any significant performance advantage.
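The MAC bottleneck can be illustrated with a simple model. The Python sketch below assumes a fixed 200-microsecond overhead per frame, a round number standing in for backoff, preambles, interframe spaces, and the ACK; it is not a value from the standard or a measurement. With 1500-byte frames, it lands just under 30Mbps for 54Mbps hardware and shows the effective rate barely growing as the PHY rate climbs.

```python
FRAME_BITS = 1500 * 8    # one full-size Ethernet payload
OVERHEAD_US = 200        # assumed fixed cost per frame (illustrative)


def effective_mbps(phy_rate_mbps, frame_bits=FRAME_BITS, overhead_us=OVERHEAD_US):
    """User data rate once the fixed per-frame overhead is paid."""
    airtime_us = frame_bits / phy_rate_mbps          # Mbps == bits per microsecond
    efficiency = airtime_us / (airtime_us + overhead_us)
    return phy_rate_mbps * efficiency


for rate in (6, 54, 150, 600):
    print(f"PHY {rate:3} Mbps -> about {effective_mbps(rate):5.1f} Mbps of user data")
```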

The problem was particularly acute with the release of the IEEE 802.11n standard amendment that uses MIMO, wider channels, and more efficiency in general to accommodate physical layer speeds up to 600Mbps. Despite this impressive number, the MAC bottleneck would have limited the real-world performance to less than that of 100Mbps wired Ethernet. This was clearly unacceptable. The solution was to aggregate multiple packets into larger packets with the consequent reduction in terms of relative overhead. Each time that a station obtains access to the channel, it transmits several aggregated packets, and pays the overhead tax only once. This simple scheme was crucial to avoid the MAC bottleneck and break the 100Mbps real-world throughput barrier in 802.11n.
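Aggregation can be added to a similar model. This Python sketch assumes a fixed 200-microsecond overhead per channel access (an illustrative round number) and 1500-byte frames, and ignores per-subframe delimiters and padding. Forty full-size frames (60,000 bytes) still fit within 802.11n's limit of 65,535 bytes per aggregate.

```python
def aggregated_mbps(phy_rate_mbps, frames, frame_bits=12_000, overhead_us=200):
    """Throughput when the per-access overhead is paid once per burst of frames."""
    airtime_us = frames * frame_bits / phy_rate_mbps
    return frames * frame_bits / (airtime_us + overhead_us)   # bits/us == Mbps


for n in (1, 4, 16, 40):
    print(f"{n:2} frames per access at 600 Mbps -> {aggregated_mbps(600, n):5.1f} Mbps")
```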

Protection against slow neighbors

It is often claimed that 802.11 networks operate at the rate of the slowest connected device. Fortunately, that's not true, although the performance anomaly mentioned above kicks in when the slower devices start to transmit. Also, in a mixed-mode network, the faster devices do have to slow down to varying degrees to coexist with older devices. This started with 802.11b, which introduced shorter headers than those of the original 1 and 2Mbps 802.11. But those shorter headers can only be used when every system on the network is an 802.11b system. More problematic is the coexistence between 802.11b and 802.11g, because 802.11b systems see OFDM signals as meaningless noise.

So if one or more 802.11b systems are present on a channel, OFDM transmissions are "protected" by DSSS RTS/CTS packets (or just CTS packets). The CTS packet announces a duration that covers the OFDM data packet that follows it and the OFDM acknowledgment for that data packet, so DSSS stations remain quiet during that time. Protection kicks in if any DSSS stations are detected, even if they're part of some other network down the street, at the edge of wireless range, that happens to use the same channel. This protection seriously cramps 802.11g's style: throughput drops by about 50 percent. But without it, DSSS systems may transmit in the middle of an OFDM transmission, to the detriment of both.
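The duration a protection frame announces is plain arithmetic over frame airtimes. The sketch below estimates it for a CTS-to-self, using the 802.11a/g OFDM airtime formula (20 µs preamble and SIGNAL field, 4 µs symbols, 16 SERVICE bits and 6 tail bits). Assumptions: a 10 µs SIFS as on 2.4GHz, an ACK sent at 24Mbps, the 6 µs signal extension that 802.11g adds after each OFDM frame ignored, and hypothetical function names.

```python
import math

# Data bits per 4 us OFDM symbol for each 802.11a/g rate (in Mbps).
NDBPS = {6: 24, 9: 36, 12: 48, 18: 72, 24: 96, 36: 144, 48: 192, 54: 216}
SIFS_US = 10      # ASSUMPTION: 2.4 GHz short interframe space
ACK_BYTES = 14    # an ACK frame is 14 bytes including its FCS


def ofdm_airtime_us(frame_bytes: int, rate_mbps: int) -> int:
    """Airtime of one OFDM frame, ignoring the 802.11g signal extension."""
    bits = 16 + 8 * frame_bytes + 6            # SERVICE + frame + tail bits
    symbols = math.ceil(bits / NDBPS[rate_mbps])
    return 20 + 4 * symbols                    # preamble/SIGNAL + data symbols


def cts_to_self_duration_us(data_bytes: int, data_rate: int, ack_rate: int = 24) -> int:
    """Quiet time requested by the CTS, counted from the end of the CTS itself."""
    return (SIFS_US + ofdm_airtime_us(data_bytes, data_rate)
            + SIFS_US + ofdm_airtime_us(ACK_BYTES, ack_rate))


if __name__ == "__main__":
    # A hypothetical 1500-byte MAC frame sent at 54 Mbps.
    print(cts_to_self_duration_us(1500, 54))   # prints 292
```

The 1500-byte figure stands for the whole MAC frame (header, body, and FCS); the result comes from 10 + 244 + 10 + 28 µs.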

Because 802.11a, g, and n all use OFDM modulation, backward compatibility is easier here: the first part of the physical-layer header is sent in the 802.11a/g format, so older devices can work out the duration of the transmission. The remaining header fields and the data are then transmitted at 802.11n speeds. Because 802.11a networks are relatively rare, it's usually no problem to run in 802.11n-only mode on the 5GHz band, but doing so on the 2.4GHz band may not be appreciated by your 802.11g neighbors.
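One way to see how an older device "knows the duration": the legacy header advertises a rate (6Mbps) and a length, and the receiver turns those into airtime. The sender can choose a length value so that the computed duration covers the entire 802.11n transmission. The sketch derives that value from the 802.11a/g airtime formula. We believe it matches the rule in the 802.11n amendment, but treat that as an assumption; the helper names are hypothetical, and the 12-bit limit on the legacy length field is the only other constraint modeled.

```python
import math

MAX_LEGACY_LENGTH = 4095   # the legacy LENGTH field is 12 bits wide


def legacy_duration_us(l_length: int) -> int:
    """Airtime an 802.11a/g receiver infers from a 6 Mbps legacy header."""
    bits = 16 + 8 * l_length + 6               # SERVICE + payload + tail bits
    return 20 + 4 * math.ceil(bits / 24)       # 24 data bits per symbol at 6 Mbps


def spoofed_l_length(ht_txtime_us: int) -> int:
    """Hypothetical helper: LENGTH that makes legacy stations defer long enough."""
    if ht_txtime_us <= 20:
        raise ValueError("transmission must outlast the legacy preamble")
    symbols = math.ceil((ht_txtime_us - 20) / 4)
    l_length = 3 * symbols - 3                 # 3 bytes per 6 Mbps symbol
    if l_length > MAX_LEGACY_LENGTH:
        raise ValueError("too long to describe with a legacy header")
    return l_length


if __name__ == "__main__":
    length = spoofed_l_length(300)
    print(length, legacy_duration_us(length))  # prints 207 300
```

The legacy estimate is never shorter than the real transmission and overshoots by less than one 4 µs symbol. The 12-bit length field caps such a transmission at about 5.5 ms.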

Standardization and certification

So far, we've used the terms Wi-Fi ("wireless fidelity", a play on hi-fi) and IEEE 802.11 interchangeably, but there is a difference. The IEEE is the standardization body in charge of the IEEE 802.11 standard, which it develops through long and tedious procedures. For instance, work on the IEEE 802.11n amendment started back in 2002. By 2007, most of the technical details were settled, but it took until 2009 before the amendment was officially approved. It doesn't help that the companies that work together in the IEEE are competitors in the marketplace, and that it can be a huge windfall for a company to have its patented technology become part of a standard.

The Wi-Fi Alliance, on the other hand, is an industry consortium that certifies hardware as compliant with its specifications and able to interoperate. The Wi-Fi Alliance performs a set of tests and, after payment of the relevant fees, certifies that a product complies with the specification. Certified products carry a logo that identifies them as standards compliant. The Alliance's specifications follow the 802.11 standard, but may require the implementation of certain optional features, or even forbid deprecated ones such as WEP.

Security

If you connect your computer to your home router using that trusty UTP cable, it's highly unlikely that your nosy neighbor can spy on your browsing habits. For a wireless connection, the situation can be very different. Radio waves do not recognize property limits; anyone can purchase a directional antenna and collect wireless data from a safe distance. Your cheap neighbor may even take advantage of your broadband connection instead of paying for his own cable or ADSL connection. To avoid these eventualities, the first versions of the Wi-Fi standard came with "wired equivalent privacy" (WEP) to secure the wireless network. Unfortunately, WEP doesn't exactly live up to its name. WEP was developed back in the days when the US government didn't want strong encryption to be exported, so WEP originally used 40-bit keys, which were intentionally far too short to offer much more than an illusion of security. (Later versions support 104-bit keys.)
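Some quick arithmetic shows why 40 bits offers little protection. The sketch computes the worst-case time to try every key, assuming a made-up attacker speed of one billion guesses per second; the rate and the function name are illustrative assumptions.

```python
# Rough worst-case brute-force time for WEP's secret key sizes.
# ASSUMPTION: a hypothetical attacker testing 1 billion keys per second.
GUESSES_PER_SECOND = 1e9
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def worst_case_seconds(key_bits: int, rate: float = GUESSES_PER_SECOND) -> float:
    """Time to try every possible key of the given length."""
    return 2 ** key_bits / rate


if __name__ == "__main__":
    print(f"40-bit:  {worst_case_seconds(40) / 60:.1f} minutes")
    print(f"104-bit: {worst_case_seconds(104) / SECONDS_PER_YEAR:.2e} years")
```

At that assumed rate, a 40-bit key falls in under 20 minutes, while a 104-bit key would take on the order of 10^14 years. In practice, WEP is broken by attacks that never need to search the key space at all.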

The 40 or 104 key bits are combined with a 24-bit initialization vector (IV) for a total of 64 or 128 bits. The encryption algorithm in WEP is the Rivest Cipher RC4, a stream cipher. However, over the years many weaknesses have been found in RC4 and in the way WEP uses it, to the degree that WEP can now be cracked in minutes. This prompted the Wi-Fi Alliance to come up with a new security framework called Wi-Fi Protected Access (WPA), while the IEEE started to work on a new security standard called IEEE 802.11i.
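The sketch below shows how the IV and key combine. Each packet's RC4 key is the 3-byte IV followed by the 5- or 13-byte secret, and the resulting keystream is XORed with the data. It deliberately omits the CRC-32 integrity value and frame formatting of real WEP, and `wep_encrypt` is a hypothetical helper. The demo shows one consequence of the small IV space (2^24, about 16.8 million values): once an IV repeats under the same key, the keystream repeats, and XORing the two ciphertexts cancels it.

```python
# Minimal sketch of WEP-style encryption: RC4 keyed with IV || secret key.
# Simplified: the CRC-32 integrity check value (ICV) that real WEP appends
# to each frame, and all frame formatting, are omitted.


def rc4_keystream(key: bytes, length: int) -> bytes:
    """Generate `length` bytes of RC4 keystream for `key`."""
    # Key-scheduling algorithm (KSA): permute 0..255 under control of the key.
    s = list(range(256))
    j = 0
    for i in range(256):
        j = (j + s[i] + key[i % len(key)]) % 256
        s[i], s[j] = s[j], s[i]
    # Pseudo-random generation algorithm (PRGA): emit one byte per step.
    out = bytearray()
    i = j = 0
    for _ in range(length):
        i = (i + 1) % 256
        j = (j + s[i]) % 256
        s[i], s[j] = s[j], s[i]
        out.append(s[(s[i] + s[j]) % 256])
    return bytes(out)


def rc4(key: bytes, data: bytes) -> bytes:
    """Encrypt or decrypt (the same operation) by XORing with the keystream."""
    return bytes(d ^ k for d, k in zip(data, rc4_keystream(key, len(data))))


def wep_encrypt(iv: bytes, secret: bytes, plaintext: bytes) -> bytes:
    """Hypothetical helper: per-packet RC4 key = 24-bit IV + 40/104-bit secret."""
    if len(iv) != 3:
        raise ValueError("WEP IVs are 24 bits (3 bytes)")
    if len(secret) not in (5, 13):
        raise ValueError("WEP keys are 40 or 104 bits (5 or 13 bytes)")
    return rc4(iv + secret, plaintext)


if __name__ == "__main__":
    secret = bytes.fromhex("0102030405")   # made-up 40-bit key
    iv = bytes.fromhex("000001")           # the IV is sent in the clear
    p1, p2 = b"attack at dawn", b"retreat at six"
    c1, c2 = wep_encrypt(iv, secret, p1), wep_encrypt(iv, secret, p2)
    # Same IV + key -> same keystream, so it cancels out of c1 XOR c2,
    # leaking p1 XOR p2 without any knowledge of the key.
    leaked = bytes(a ^ b for a, b in zip(c1, c2))
    print(leaked == bytes(a ^ b for a, b in zip(p1, p2)))   # prints True
```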

The goal of WPA was to prop up Wi-Fi security without having to replace hardware, while the IEEE's goal was to build something fundamentally better. WPA introduced the Temporal Key Integrity Protocol (TKIP), which reuses the RC4 capabilities of existing Wi-Fi cards but creates a new encryption key for each packet. This avoided most of the then-known RC4 vulnerabilities. WPA2 is the Wi-Fi Alliance's name for IEEE 802.11i, which uses CCMP, the "counter mode with cipher block chaining message authentication code protocol" (say that three times fast). CCMP is based on the widely used AES encryption algorithm.

Both WPA and WPA2 are available in Personal and Enterprise forms. WPA2 Personal uses a 256-bit pre-shared key (PSK), which is used to negotiate the actual packet encryption keys. Obviously, those who don't know the PSK can't join the network. WPA2 Enterprise uses a plethora of additional protocols (IEEE 802.1X and EAP, typically backed by a RADIUS server) to let the user provide a username and password, which are checked against a remote authentication server. Always use only WPA2 with CCMP/AES unless you absolutely need compatibility with very old equipment.
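In WPA/WPA2 Personal, users rarely type the 256-bit PSK directly. They enter a passphrase of 8 to 63 characters, which is stretched into the PSK with PBKDF2-HMAC-SHA1, using the network name (SSID) as the salt and 4096 iterations. The sketch below uses Python's standard `hashlib.pbkdf2_hmac`. Encoding the SSID as UTF-8 is an assumption (an SSID is really just up to 32 raw bytes). The four-way handshake that turns the PSK into per-session keys is not shown.

```python
import hashlib


def wpa_psk_from_passphrase(passphrase: str, ssid: str) -> bytes:
    """Derive the 256-bit WPA/WPA2 Personal PSK from a passphrase and SSID."""
    if not 8 <= len(passphrase) <= 63:
        raise ValueError("WPA passphrases must be 8 to 63 characters")
    return hashlib.pbkdf2_hmac(
        "sha1",
        passphrase.encode("ascii"),   # passphrases are printable ASCII
        ssid.encode("utf-8"),         # ASSUMPTION: SSID given as UTF-8 text
        4096,                         # fixed iteration count
        dklen=32,                     # 32 bytes = 256-bit PSK
    )


if __name__ == "__main__":
    print(wpa_psk_from_passphrase("password", "IEEE").hex())
```

Because the SSID acts as the salt, the same passphrase yields a different PSK on a network with a different name. That is also why a common SSID combined with a weak passphrase makes precomputed guessing attacks more practical.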

Although wireless communication, especially on the frontier-like ISM band, is fraught with hidden dangers, the vendors working together in the IEEE and Wi-Fi Alliance have managed to successfully shepherd wireless LAN technology from humble beginnings to the reasonably reliable high performance we enjoy today. Every time an obstacle presented itself, new technology was introduced to circumvent it, while the quickly growing market kept prices under pressure. What more could we ask for?
